File Transfer & Information Security Blog

Featured Articles

-

Podcast: Why Cyber Hygiene Is So Important

Greg Mooney | Security

In this podcast, Dr. Arun Vishwanath discusses the theory of cyber hygiene, what it is, and why it needs to become part of everyone's vocabulary.

-

A VPN is NOT A Security Tool! It's in the Name

Michael O'Dwyer | IT Insights | Security

Some may argue that VPNs enhance security (encrypted connections, etc.), but their core aim is privacy. It's in the name: virtual private network.

-

FERPA, HIPAA, and Compliance in File Transfer and Storage

Andrew Evans | Cloud | Security

With the rising risk of a data breach, regulatory compliance is not optional. Avoid falling out of compliance with FERPA and HIPAA through proper encryption, user management, network protection, and the use of managed file transfer.

-

What is Electronic File Transfer (EFT)?

Greg Mooney | Automation | File Transfer | Security

Electronic file transfer, abbreviated EFT, is a procedure that uses an electronic format and protocol to exchange different types of data files.

-

Mobile File Transfers On The Go

Adam Bertram | File Transfer | Security

Transferring files quickly and securely in a modern business environment is a challenge, given the variety of devices, methods, and environments an individual may find themselves working in.

-

Podcast: Continuous Integration and Deployment

One of the most popular techniques for faster software delivery is continuous integration and continuous deployment, or CI/CD for short.

-

Mobile Telephony is Dying - Here's Why

Arun Vishwanath | IT Insights

Verizon, AT&T, T-Mobile: I hope you are reading this. Mobile telephony, your primary business model of enabling phone calls and text messaging, is dying.

-

Introducing the MOVEit Customer Validation Program

Make your voice heard and influence the future of MOVEit with our Customer Validation Program (CVP)! Read on for full details about the program and how you can get involved.

-

What is an AD FS Server?

Setting up an AD FS server can be difficult, as there are many options and configurations that you need to be aware of.

-

4 Ways IT Can Prepare for Employees Returning to the Office

Michael O'Dwyer | IT Insights

As some companies consider reopening and employees stop working from home, the traditional office setup is needed again. How will IT handle it? What will the office look like post-COVID?

-

Podcast: Cybersecurity in the Automotive Industry

It is no secret that rising digitalization has increased the risks of hacking, spying, and online data theft. These risks are a focal point in the development of cybersecurity in the automotive industry.

-

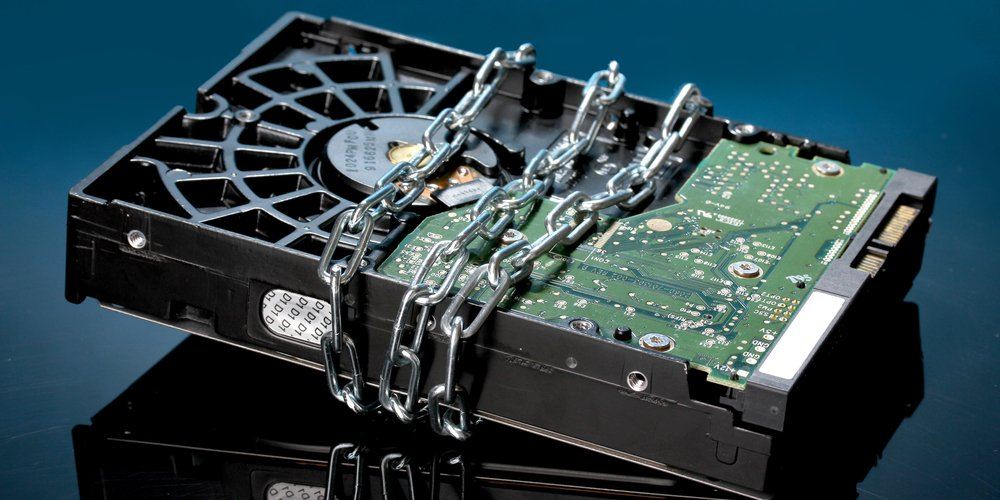

Cyber Security Insurance and Minimizing Risk in the Cloud

Gwen Luscombe | Cloud | Security

There are a lot of misconceptions around the cloud and liability. Organizations often assume risk is transferred when data moves to a third party.

-

What Is FERPA and What Are the Necessary Security Controls?

Greg Mooney | Security

The right to privacy for individuals impacts just about every industry, and education is no different.

-

5 Data Security and Compliance Issues in Higher Education

Cyberattacks against colleges and universities are undeniably lucrative for attackers.

-

What Is Non-Repudiation and How Can Managed File Transfer Help?

Greg Mooney | File Transfer | Security

Talk about an awkward situation: you and a customer collaborate online, sharing a view of a contract and trying to agree on the payment schedule.

-

Data Security In The Cloud: Part 2

Arun Vishwanath | Cloud | File Transfer | Security

Vulnerabilities in cloud-sharing services stem from the use of multiple cloud services, which forces users to keep adapting and adjusting their expectations.

-

Managed File Transfer As SaaS Vs. On-Prem

Greg Mooney | File Transfer | Security

Keeping files and data secure when more users are working remotely is, of course, a concern. That's why a SaaS managed file transfer solution is critical.

-

Why IT Automation is the Backbone of Every DevOps Transformation

Jeff Edwards | Automation | IT Insights

DevOps! Over the past half-decade, the illustrious marriage between Development and Operations has simultaneously become the biggest buzzword in tech since "The Cloud" and a critical piece of software engineering and delivery methodology. But to many, the DevOps...

-

Data Security in the Cloud: Part 1

Arun Vishwanath | Cloud | Security

The adoption of public cloud computing makes user data less secure. And it's not for the reasons most in IT realize.

-

Ensuring Secure File Transfers from Mobile Devices

Michael O'Dwyer | File Transfer | Security

Like it or not, today’s world is a connected one, and data is shared at an alarming rate between mobile device users, company networks, and cloud services (including social networks).