File Transfer & Information Security Blog

Featured Articles

-

Getting Your CEO to Say Yes to the Cloud

Doug Barney | | Cloud

Convincing the CEO that a cloud-first and cloud migration strategy makes economic and technological sense.

-

9 Simple, Yet Little Known, Secrets to Secure Cloud Transfer

Victor Kananda | | Cloud

Check out nine of the best cloud file-sharing security practices, along with lists of examples of what IT systems managers can do next.

-

Data Protection Day, Data Privacy Day, or Data Privacy Week?

Europe has long been at the forefront of data protection, years before the creation of Data Protection Day. Today, Data Protection Day has become a vital part of the data privacy movement in other countries, like the United States.

-

GDPR Will Soon be Everywhere as Canada Preps its Own Version

Canada is the latest GDPR-like entrant, having drafted the Canadian Consumer Protection Act.

-

Avoiding the Insane Cost of a Data Breach

Doug Barney | | File Transfer | Security

How much do lost files and records really cost?

-

Government Data Security Starts with File Protection

Doug Barney | | File Transfer | Security

All the firewalls and anti-malware solutions in the world can’t protect government data when it is emailed around at near-spam levels. And just as governmental organizations come in all shapes and sizes, so do the threats.

-

Everything You Need to Know About Protecting Legal Files

Doug Barney | | File Transfer | Security

The secure transfer of legal files is crucial to maintaining attorney-client privilege and can be done effectively with a Managed File Transfer (MFT) solution.

-

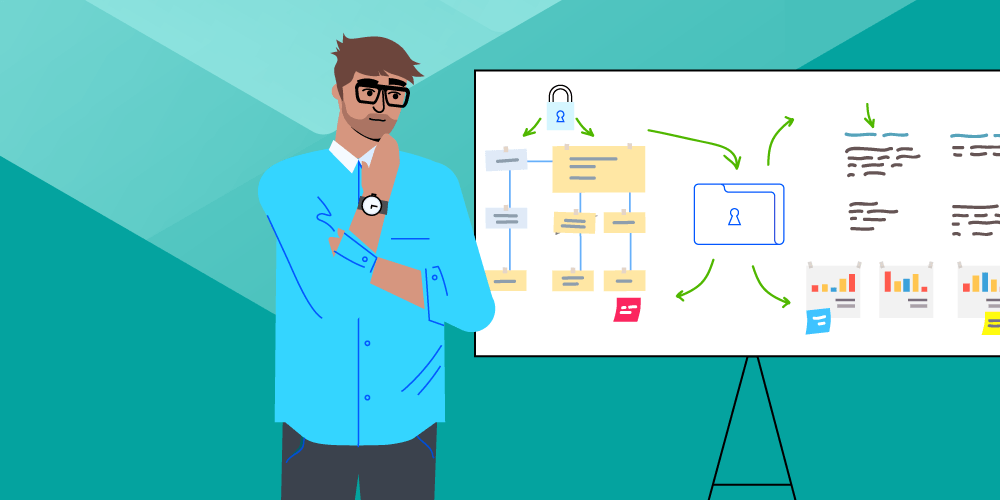

How Managed File Transfer (MFT) Fits In Your Cybersecurity Strategy

Joseph Barringhaus | | File Transfer | Security

Even after investing in everything, crossing every t and dotting every i, executives often feel that something is still missing. Many wish there were an extra mile or two they could go to secure their systems. That's where Managed File Transfer comes in.

-

Health Clinic Files Get from There to Here with no Wrong Turns

Doug Barney | | Automation | File Transfer

MOVEit is the perfect medicine for safe, efficient and compliant file transfers for Hattiesburg Clinic in South Mississippi.

-

How To Avoid File Sharing Inefficiencies With File Transfer Automation

Doug Barney | | Automation | File Transfer

In an IT ecosystem marred by hacking incidents, where sensitive, high-volume, compliance-regulated files are always on the move, efficiency in file transfer means so much more. It means automation. It means keeping data moving reliably, seamlessly, and securely.

-

What's New in MOVEit 2021.1

Progress is pleased to announce MOVEit 2021.1, with incremental feature improvements that extend accessibility, scalability, and usability in the industry’s leading secure Managed File Transfer (MFT) software.

-

File Sharing Security Risks and How To Mitigate Them With Managed File Transfer (MFT)

Joseph Barringhaus | | IT Insights

Let's play a game. Close one eye if you feel your existing file sharing mechanisms and technologies lack the necessary security, control and governance.

-

Secure File Transfer: A Day in the Life of a File

What does a file go through to complete a secure, efficient journey?

-

Making File Transfer Safe and Easy: Just Say No to Scripting

Doug Barney | | Automation | File Transfer

File transfers are too important to trust to just any old script. Ensure fast, secure transfers with automation.

-

Avoiding the GDPR Data Transfer Pothole – Don’t Let Data Get Lost or Stolen During Transit

Doug Barney | | File Transfer | Security

GDPR is now three years old, and even the largest companies are still regularly caught in its snares. And those that haven't yet run into trouble may be more vulnerable than they think. In this blog, learn more about data protection and GDPR compliance.

-

Data Protection is Not Complete Without a Secure Managed File Transfer (MFT) Software

Doug Barney | | File Transfer | Security

Data threats are increasing every day and smart IT pros are racing to not just keep up but get ahead. One area many neglect is secure file transfer.

-

What Are the Top Methods for Secure File Transfer – and Which Ones Don't Work

Doug Barney | | File Transfer | Security

Just because a tool calls itself Secure File Transfer doesn't make it so. Many so-called secure solutions are in fact only partially secure – and sometimes barely secure. So what are the top methods to transfer files securely?

-

Three Keys to Healthcare IT: HIPAA, Zero Trust and Ensuring File Transfers Are Secure

The bar for security in Healthcare IT is so high it takes Olympic-class efforts to clear it. Securing data is the most critical hurdle. So where is your most sensitive data? In files, of course. And it is these files that fly about like seagulls at the fishing pier....

-

How to Automate and Integrate with Managed File Transfer

Joseph Barringhaus | | Automation

Manually transferring files through legacy protocols such as FTP is a painful memory you would probably like to forget. That's where automation comes into play.

-

What is Secure File Transfer?

Doug Barney | | File Transfer | Security

Secure File Transfer is as simple as it sounds: the secure transfer of files. But the real questions revolve around why you need to securely transfer files, what happens if you don’t, and how the heck you can do it properly.